Queues in System Design Highlighted as Key to Scalability and Reliability

Rising Importance of Message Queues

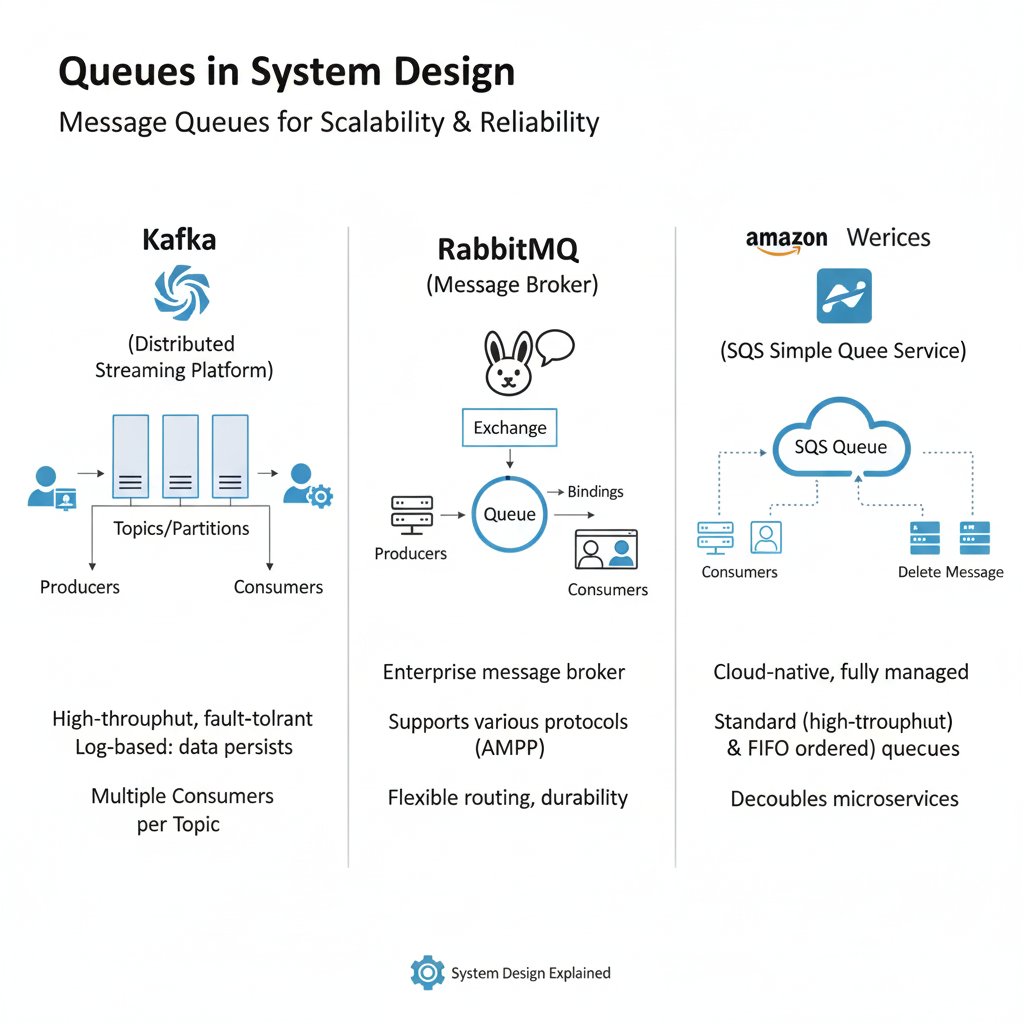

In an era where businesses increasingly operate at global scale and applications must process data in real time, message queues have emerged as a critical component of modern system design. By decoupling components, balancing workloads, and maintaining reliability even under heavy demand, these technologies provide the backbone for scalable, distributed architectures across industries. Three of the most widely discussed solutions, Apache Kafka, RabbitMQ, and Amazon Simple Queue Service (SQS), each bring distinct design philosophies and strengths, shaping how enterprises handle messaging, streaming, and synchronization.

While each system is tailored to specific use cases, the common thread is clear: effective message queues are vital for ensuring that systems continue to function smoothly even under massive load or sudden spikes in activity. From financial markets and social networks to retail and logistics, these tools now play roles once handled by less adaptable, tightly coupled architectures.

What Message Queues Solve

At their core, message queues act as intermediaries, allowing applications to communicate asynchronously. Instead of services exchanging information directly and synchronously, which creates fragility and bottlenecks, messages are stored, delivered, and processed in a controlled manner. This design greatly enhances fault tolerance: if a consuming component fails, the queued messages remain intact, ready to be processed once the service is restored.
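The decoupling described above can be illustrated with a minimal sketch. It uses Python's in-memory `queue.Queue` as a stand-in for a broker, which is an assumption made purely for illustration: a real broker would persist messages outside the producer's process. The names `produce` and `consume_available` are hypothetical.

```python
import queue

# Toy in-memory stand-in for a message broker (assumption for illustration only).
broker = queue.Queue()

def produce(orders):
    """Enqueue work and return immediately, without waiting for any consumer."""
    for order in orders:
        broker.put(order)

def consume_available():
    """Drain whatever is waiting in the queue and return the processed results."""
    processed = []
    while True:
        try:
            order = broker.get_nowait()
        except queue.Empty:
            return processed
        processed.append(f"processed {order}")

# The consumer is "down" while the producer keeps publishing.
produce(["order-1", "order-2", "order-3"])
backlog = broker.qsize()

# Once the consumer is back, it works through the backlog in arrival order.
results = consume_available()
```

The producer never calls the consumer directly, so a consumer outage shows up only as a growing backlog rather than as a failed request.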

This principle has historical precedent. Early computing systems often relied on tightly coupled architectures where a single failure could bring down entire workflows. As web applications scaled in the late 1990s and early 2000s, businesses increasingly turned to distributed systems, where message queues and brokers allowed for loose coupling and more resilient communication.

Today’s top message queue solutions continue this tradition but adapt it to the realities of global cloud infrastructure, microservices, and data streaming at terabyte scales.

Kafka: Distributed Streaming at Scale

Apache Kafka, originally developed at LinkedIn and open-sourced in 2011, has grown into one of the most widely used distributed event-streaming platforms in the world. Kafka is based on a log-structured architecture: events are appended once to a durable log, and multiple consumers read them independently, each tracking its own position (offset). This design provides very high throughput, making it particularly well-suited for real-time analytics, monitoring, and event-driven applications.
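The write-once, read-many idea can be sketched as a toy append-only log. This is not Kafka's client API: `EventLog`, `append`, and `poll` are hypothetical names, and the sketch leaves out partitions, persistence, and networking.

```python
class EventLog:
    """Toy append-only log: reading an event never removes it."""

    def __init__(self):
        self._events = []
        self._offsets = {}  # consumer name -> next position to read

    def append(self, event):
        """Write an event once and return its offset in the log."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, consumer, max_events=10):
        """Return unread events for this consumer and advance only its own offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch

log = EventLog()
for event in ["page_view", "click", "purchase"]:
    log.append(event)

# Two independent consumers read the same events at their own pace.
analytics = log.poll("analytics")
monitoring_first = log.poll("monitoring", max_events=2)
monitoring_rest = log.poll("monitoring")
```

Because each consumer only moves its own offset, adding a new reader does not affect existing ones, which is the property that lets one stream feed several downstream systems.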

Kafka’s durability lies in its replication model. Messages are persisted on disk and replicated across brokers in a cluster, ensuring fault tolerance even in the event of hardware failures. The platform’s ability to handle high-volume, high-velocity data streams has made it central to industries such as finance, telecommunications, and media.
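As a rough illustration of why replication helps, the sketch below copies every message to several toy brokers and keeps serving reads after one of them fails. It is a deliberate simplification: `Broker` and `ReplicatedPartition` are hypothetical names, and the sketch does not model Kafka's actual leader election, in-sync replica tracking, or disk persistence.

```python
class Broker:
    """Toy broker holding an in-memory copy of the log (a real one writes to disk)."""

    def __init__(self, name):
        self.name = name
        self.log = []
        self.alive = True

class ReplicatedPartition:
    """Copies each message to every live broker; the first live broker serves reads."""

    def __init__(self, brokers):
        self.brokers = brokers

    def leader(self):
        # Simplification: the first live broker acts as leader.
        for broker in self.brokers:
            if broker.alive:
                return broker
        raise RuntimeError("no live replicas")

    def append(self, message):
        for broker in self.brokers:
            if broker.alive:
                broker.log.append(message)

    def read_all(self):
        return list(self.leader().log)

brokers = [Broker("broker-1"), Broker("broker-2"), Broker("broker-3")]
partition = ReplicatedPartition(brokers)
partition.append("trade-1")
partition.append("trade-2")

# Simulate a hardware failure on the current leader.
brokers[0].alive = False
survivor = partition.leader().name
recovered = partition.read_all()
```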

For instance, LinkedIn itself uses Kafka extensively to process user activity streams and deliver personalized content. Similarly, companies like Netflix and Uber employ Kafka to power real-time data pipelines that track customer interactions and internal system health, enabling quick responses to dynamic market demands.

RabbitMQ: Flexible Routing and Enterprise Messaging

RabbitMQ, first released in 2007, focuses on reliability and enterprise-grade messaging capabilities. Unlike Kafka, which was designed primarily for high-throughput event streaming, RabbitMQ excels at message delivery guarantees, varied routing patterns, and protocol support. Built around the Advanced Message Queuing Protocol (AMQP), RabbitMQ lets applications publish messages to exchanges, which route them to specific queues according to predefined rules known as bindings.
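Rule-based routing of this kind can be sketched with a toy exchange that matches routing keys exactly. This is an in-memory illustration only, not RabbitMQ's client API; `DirectExchange`, `bind`, and `publish` are hypothetical names, and the queue names and routing keys are invented for the example.

```python
from collections import defaultdict, deque

class DirectExchange:
    """Toy exchange that copies each message to every queue bound to its routing key."""

    def __init__(self):
        self._bindings = defaultdict(list)  # routing key -> bound queue names
        self.queues = defaultdict(deque)    # queue name -> pending messages

    def bind(self, queue_name, routing_key):
        """Register a routing rule: messages with this key go to this queue."""
        self._bindings[routing_key].append(queue_name)

    def publish(self, routing_key, body):
        """Route a message and return how many queues received it (0 = unroutable)."""
        targets = self._bindings.get(routing_key, [])
        for queue_name in targets:
            self.queues[queue_name].append(body)
        return len(targets)

exchange = DirectExchange()
exchange.bind("invoices", "order.paid")
exchange.bind("shipping", "order.paid")
exchange.bind("refunds", "order.cancelled")

delivered = exchange.publish("order.paid", "order 1001")
unroutable = exchange.publish("order.unknown", "order 1002")
```

The publisher only names a routing key. Which queues receive the message is decided by the bindings, so new consumers can be added without changing the publisher.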

This flexibility makes RabbitMQ indispensable for traditional business applications, such as order and invoice processing in e-commerce, task scheduling in enterprise systems, and workflows requiring complex, multi-step orchestration.

Durability is supported through message persistence and acknowledgments: when queues and messages are configured as durable, critical business transactions can survive broker restarts, and a message that a consumer fails to acknowledge is redelivered rather than dropped. Companies dealing with sensitive, transactional workloads often rely on RabbitMQ precisely because of its ability to adapt to diverse routing needs while maintaining stable performance.
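The acknowledgment mechanism can be sketched as follows. The queue keeps a copy of every delivered message until the consumer confirms it, and returns unconfirmed messages to the queue if the consumer goes away. `AckQueue` and its methods are hypothetical names for an in-memory toy, not RabbitMQ's API, and persistence to disk is not modeled.

```python
from collections import deque

class AckQueue:
    """Toy queue that holds delivered messages until they are acknowledged."""

    def __init__(self):
        self._ready = deque()
        self._unacked = {}  # delivery tag -> message body
        self._next_tag = 1

    def publish(self, body):
        self._ready.append(body)

    def deliver(self):
        """Hand out the next message as (tag, body), or None if the queue is empty."""
        if not self._ready:
            return None
        body = self._ready.popleft()
        tag = self._next_tag
        self._next_tag += 1
        self._unacked[tag] = body
        return tag, body

    def ack(self, tag):
        """Consumer confirms processing; only now is the message discarded."""
        self._unacked.pop(tag)

    def requeue_unacked(self):
        """Simulate a consumer disconnect: unacknowledged messages return to the front."""
        for tag in sorted(self._unacked, reverse=True):
            self._ready.appendleft(self._unacked.pop(tag))

q = AckQueue()
q.publish("invoice-1")
q.publish("invoice-2")

tag1, body1 = q.deliver()
q.ack(tag1)                # processed successfully
tag2, body2 = q.deliver()  # consumer crashes before acknowledging this one
q.requeue_unacked()
redelivered = q.deliver()
```

Redelivery means a message may be processed more than once, so consumers in such a design typically need to tolerate duplicates.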

Amazon SQS: Cloud-Native and Fully Managed

In contrast to open-source, self-managed brokers like Kafka and RabbitMQ, Amazon Simple Queue Service (SQS) offers a fully managed, cloud-native option. Introduced in 2004, SQS was one of the earliest services in Amazon Web Services’ (AWS) portfolio and remains a cornerstone of its cloud ecosystem.

SQS abstracts away infrastructure concerns, automatically scaling to handle virtually any volume of messages without management overhead. Two queue types make it versatile for developers: Standard Queues, which prioritize high throughput and offer at-least-once delivery with best-effort ordering, and FIFO (First-In-First-Out) Queues, which preserve strict ordering within a message group and use deduplication to provide exactly-once processing.
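The FIFO behavior can be approximated with a small in-memory model. This is a sketch under stated assumptions, not the AWS SDK: `ToyFifoQueue` is a hypothetical class, its `group_id` and `dedup_id` parameters loosely mirror the message group ID and deduplication ID that SQS FIFO queues use, and the time-limited deduplication window of the real service is not modeled. Receiving by group is also a simplification made for readability.

```python
class ToyFifoQueue:
    """Toy FIFO queue: drops duplicate sends and keeps order within each group."""

    def __init__(self):
        self._messages = []          # (group_id, body) in arrival order
        self._seen_dedup_ids = set()

    def send(self, body, group_id, dedup_id):
        """Enqueue a message unless its dedup_id was already seen.

        Returns False for a duplicate so the example can show the effect.
        """
        if dedup_id in self._seen_dedup_ids:
            return False
        self._seen_dedup_ids.add(dedup_id)
        self._messages.append((group_id, body))
        return True

    def receive(self, group_id):
        """Return and remove the oldest message for a group, or None if there is none."""
        for index, (group, body) in enumerate(self._messages):
            if group == group_id:
                self._messages.pop(index)
                return body
        return None

q = ToyFifoQueue()
first = q.send("charge card", group_id="customer-42", dedup_id="evt-1")
retry = q.send("charge card", group_id="customer-42", dedup_id="evt-1")  # producer retry
q.send("send receipt", group_id="customer-42", dedup_id="evt-2")
q.send("charge card", group_id="customer-7", dedup_id="evt-3")

received = [q.receive("customer-42") for _ in range(3)]
```

The retried send is ignored, so the customer is charged once, and the receipt is never handed out before the charge for the same customer.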

This managed approach has proven invaluable for companies building applications entirely within the AWS ecosystem. Businesses can decouple microservices without worrying about maintaining message broker infrastructure, integrating SQS directly with services like AWS Lambda, EC2, and DynamoDB. Organizations ranging from startups to multinational corporations use SQS for tasks like processing user requests, handling background jobs, and connecting disparate application components.

Comparing Performance and Use Cases

Although Kafka, RabbitMQ, and SQS are often mentioned together, their differences highlight the diversity of modern system design approaches. Kafka’s value lies in real-time event streaming across massive datasets, making it ideal for analytics pipelines and monitoring systems. RabbitMQ prioritizes reliability in transactional messaging, making it a natural choice for enterprise back-office applications. SQS, meanwhile, delivers cloud-native simplicity, empowering developers to scale applications quickly without operational complexity.

Regional adoption patterns also reflect these distinctions. In the United States, where cloud adoption is particularly high, SQS has become a mainstay for AWS-based businesses. In Europe, enterprise users often favor RabbitMQ due to regulatory contexts requiring fine-grained control of transaction flows. In Asia, where social media and e-commerce giants process staggering amounts of user interaction data, Kafka’s distributed event-streaming capacity has fueled widespread deployment.

Economic Impact of Reliable Queuing

The economic importance of these technologies extends far beyond the datacenter. By ensuring reliability and reducing downtime, businesses save millions in avoided outages and service disruptions. Studies in financial services reveal that delays of even a few milliseconds can amount to significant monetary losses, underscoring why technologies like Kafka and RabbitMQ have become integral to critical trading platforms.

At the same time, the operational efficiencies produced by cloud-based solutions like SQS reduce the need for dedicated infrastructure teams, offering cost savings for small and medium enterprises. This democratization of reliable queuing technology allows startups to scale at unprecedented rates, challenging incumbents by reducing technical barriers to entry.

Historical Context and Technological Evolution

Today’s message queuing systems are descendants of the enterprise messaging middleware of the 1990s, when products like IBM MQ (launched as MQSeries) laid the groundwork for asynchronous communication. While those early solutions were effective in mainframe and enterprise environments, they were never designed to handle the real-time, internet-scale demands of today’s digital economy. Modern solutions emerged to fill this gap, enabling systems to scale horizontally, replicate globally, and support complex orchestration with relatively simple configurations.

Kafka transformed the message broker model by treating data as a continuous, immutable log stream rather than transient point-to-point exchanges. RabbitMQ refined the transactional aspects of messaging while maintaining flexibility to support diverse business requirements. SQS reimagined the entire concept as a managed service, providing simplicity over configurability. Together, these innovations illustrate the evolutionary path from heavyweight middleware to today’s highly elastic, distributed, and cloud-native platforms.

Future Outlook

Experts anticipate continued growth in the use of message queues, driven by the expansion of IoT devices, AI-powered analytics, and 5G-enabled applications. As data volumes and demands for real-time processing escalate, the value of decoupled, fault-tolerant architectures will only increase. Hybrid approaches may also gain prominence, with companies deploying Kafka for high-volume streams, RabbitMQ for structured workloads, and SQS for quick scalability in the cloud.

This convergence reflects a broader reality: no single solution dominates every scenario. The strongest system designs will likely integrate multiple technologies, selecting queues not just on theoretical performance but on business-specific considerations such as cost, regulatory requirements, and ecosystem compatibility.

Conclusion

Message queues have quietly become foundational to the digital economy, underpinning services that billions rely upon every day. With Kafka, RabbitMQ, and Amazon SQS shaping how data flows across industries, organizations gain the tools to build systems that are not merely functional, but resilient, adaptable, and prepared for the unpredictable demands of a connected world. As distributed computing continues to evolve, queues remain not just a technical mechanism but a strategic asset powering modern innovation.